How our Leicester SEO helped iSmart become the most profitable company in Northamptonshire

In 2015 we helped a Northampton-based finance company become the most profitable business in the region. We also worked our way onto Grant Thornton’s top 200 list using our incredible Leicester SEO powers.

Working with iSmart on SEO, PPC, affiliate marketing and direct marketing, we jointly created some best-in-breed campaigns. Of course, not everything worked out. But by working closely together, we were able to turn their marketing campaigns from a shotgun approach into a sniper approach, which delivered an unrivalled CPA.

CPA

Cost per action, or CPA – sometimes referred to as cost per acquisition – is a metric that measures how much your business pays in order to attain a conversion.
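To make the definition concrete, here is a minimal Python sketch of the arithmetic. CPA is simply total campaign spend divided by the number of conversions. The function name and the figures are hypothetical and for illustration only. They are not iSmart’s real numbers.

```python
def cost_per_acquisition(total_spend: float, conversions: int) -> float:
    """CPA = total campaign spend / number of conversions (actions or acquisitions)."""
    if conversions <= 0:
        # With no conversions the metric is undefined, so fail loudly.
        raise ValueError("CPA needs at least one conversion")
    return total_spend / conversions


# Hypothetical figures for illustration only (not real campaign data).
spend_gbp = 5000.0
conversions = 250
print(f"CPA: £{cost_per_acquisition(spend_gbp, conversions):.2f}")  # CPA: £20.00
```

A lower CPA means each conversion costs you less. That is why moving from a "shotgun" to a "sniper" approach shows up directly in this number.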

The commercial director had this to say:

dapa have helped put our business in top 5 for profit growth in East Midlands and 1st for profit growth in Northamptonshire for 2015. These guys are the 1% you are looking for…

We will get back to you (normally within 10-30 minutes).

Anyway, tell me more about your Leicester SEO…

SEO: a dark art? A mysterious technical process? An Expecto Patronum spell? No, just hard work and time.

As business owners or managers, you’ve probably heard that SEO services come in many shades: white, grey and black hat, to name a few.

Many have tried it themselves, hired internal specialists or employed external agencies, with varying results. So, we decided to turn the process of SEO upside down and deliver the kind of results our clients will shout about from the rooftops.

If you’re looking for a Leicester SEO agency that will work with you to meet the goals of your business and get you rankings, traffic and more customers, give us a nudge and we’ll call you back.

Who are you and why should I trust you?

Leicester isn’t our first home. We’ve built the biggest team in the region at our head office in Northampton.

That has enabled us to establish ourselves quickly in Leicester. Most importantly, we know how to rank on Google, which, we assume, is how you found this page.

Not only does this mean people like you can find us on Google, it also means our clients will talk about us. So, a little ‘about us’ while we’re on the subject.

One fine day there was a flourishing finance company, growing fast, with a young whipper-snapper working in the marketing department focusing on SEO.

That company went from forty staff to six-hundred within a year or two and became the most profitable business in the Midlands with great help from its tremendous rankings.

Fortunately for you, the young guy, now twenty-four or so, wanted to own something for himself and parted ways with the then market-leading firm.

Three or four years and millions of pounds of unsuccessful search campaigns later, the owner of the finance company needed that SEO specialist to help him build once again.

So, together, they created a business to deliver what they both wanted. Now, that business is known as dapa and this is our Leicester SEO branch.

Not quite a fairy tale. But the difference between our approach to SEO and our counterparts’ is that we do it for the love. We are great at it, and we started in this industry to help others achieve online success.

Enough about you. How can you help me?

Would you ask your web agency to help with your laundry? No. We only do SEO. And I do mean it: if you’re looking for a fancy logo or a new website, I’m afraid we’re going to say no.

SEO is a specialist practice. It’s not a cheap add-on or something a web designer should be doing.

Not because they wouldn’t be great at it – they may well be! But because it’s not their game, it’s not their focus and it’s not their priority.

75% of Google searchers never scroll past the first results page, so we’ll make sure we get you there. If you’re still reading, I’ll assume it’s more leads from organic search traffic you actually want, so let’s get on to that.

Free SEO Consultation

Come in for a coffee or schedule a conference call with one of our leading UK specialists.

Get Started Now!

What Actually Is This Leicester SEO Stuff?

On 4 September 1998, two students changed the world. Sergey Brin and Larry Page founded Google, and the beast we know and love today has evolved many times over the past 18 years (wow, that long? I feel old now).

Today, an often-quoted statistic holds that 93% of online experiences begin with a search engine. Though big G has evolved almost beyond recognition, the world of SEO hasn’t.

Believe it or not, the principles of search (at least in our eyes) have never changed.

To understand that, all you have to do is understand Google’s only job: to give the searcher the result they want or need. That’s it. That one small job helped make Google a company with a turnover of around $90bn in 2016.

The world’s biggest middleman.

So, all we have to do is ensure our website (or our clients’) tops the search results page. Simple right?

Unfortunately not. SEO is the process of working on the hundreds of key factors that Google wants to see from a market-leading website such as yours.

Some websites have the ability to do that more than others, and that’s where our expertise comes in. Basically, ranking is a popularity contest between you and your competitors. If Google believes your website is more popular than theirs, you’ll rank higher.

After all, why wouldn’t they suggest the website that most other people like? If your friend needed their boiler fixing, you’d recommend a reputable engineer, not some obscure randomer.

How does SEO work?

Before we give away our trade secrets it’s important to understand one huge element of SEO. No-one, and we mean no-one, knows 100% how Google works.

To put that into some perspective, if you hired a salesperson, would you know everything about them? Their schedule management, workload, appointments, work process, results, motivation, family issues, what time they’re going for lunch?!

Not to mention you’re probably busy doing other jobs, rather than spending all day brooding over how Google works. Google employs 60,000 people worldwide and has over half a million servers.

As experienced Leicester SEO specialists, it’s our job to form our own conclusions based on experience, testing and constant improvement. That’s what SEO is about, and that is what you’re paying for.

Onsite SEO, ticking the boxes

Within your website’s theoretical four walls is everything we class as onsite SEO. In our experience, your website itself accounts for about 20% of the factors that affect your rankings.

Other Leicester SEO firms will quote different figures, anywhere from 5% to 50%. But remember that a lot of this is based on experience, so it will vary.

A few things that affect your onsite SEO (without being too technical):

- The number of times your product/service is mentioned on the page.

- The position of those words on the page.

- The ratio of text to code on each page.

- The URL (site.co.uk/landing-page).

- The title tag (the blue headline in Google’s results).

- The meta description (the text below that blue headline).

- The filenames of the images you’ve uploaded.

- The load time of each page.

These are a few basics of onsite SEO. To be honest, there are over a hundred more factors that need to be considered when sculpting every page on your website. It’s massively important to realise that onsite SEO can only get you so far.
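Several of the factors listed above are easier to reason about when you see them pulled out of a real page. Below is a minimal Python sketch that uses only the standard library’s `html.parser`. It extracts the title tag, the meta description and the image filenames, and it estimates a rough text-to-HTML ratio. The `OnsiteAudit` class and `audit` function are hypothetical names. The ratio is a crude heuristic for illustration, not a measure Google has documented.

```python
from html.parser import HTMLParser


class OnsiteAudit(HTMLParser):
    """Collects a few onsite SEO signals from a page's HTML (illustrative only)."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.meta_description = ""
        self.image_files: list[str] = []
        self.visible_text: list[str] = []
        self._in_title = False
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag == "img" and attrs.get("src"):
            # Keep only the filename, e.g. "leicester-seo.png".
            self.image_files.append(attrs["src"].rsplit("/", 1)[-1])

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif not self._skip_depth and data.strip():
            self.visible_text.append(data.strip())


def audit(html: str) -> dict:
    """Return title, meta description, image filenames and a rough text/HTML ratio."""
    parser = OnsiteAudit()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.visible_text)
    return {
        "title": parser.title.strip(),
        "meta_description": parser.meta_description,
        "image_files": parser.image_files,
        # Hypothetical heuristic: share of raw HTML characters that are readable text.
        "text_ratio": round(len(text) / len(html), 2) if html else 0.0,
    }


if __name__ == "__main__":
    sample_page = (
        "<html><head><title>Leicester SEO | Example</title>"
        '<meta name="description" content="Leicester SEO services.">'
        "</head><body><h1>Leicester SEO</h1>"
        '<img src="/img/leicester-seo.png">'
        "<script>var x = 1;</script><p>We rank websites.</p></body></html>"
    )
    print(audit(sample_page))
```

The script skips `<script>` and `<style>` content because it is code rather than words a visitor reads. A real audit would also look at headings, URLs and load times.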

Your website will not rank for even mildly competitive searches without links. Many Leicester SEO agencies shy away from building links, so make sure this is part of your campaign before you get started to avoid disappointment.

Link Building, crafting those SEO webs

SEO is a big popularity contest. Offsite SEO (link building) creates the ‘votes’ Google wants to see in order to decide how popular your website is. And the missing 80% of SEO, which I can hear you wondering about, is link building.

Again, many will tell you this number is much higher or lower. In our experience at least, creating links is the key to dramatically changing your ranking.

Notice how we said change, not improve? Link building can of course be done badly, very badly indeed.

This is the reason a lot of SEO companies have stopped building links completely. In our past, we’ve been involved in everything from pure white hat through off-white to completely black hat link building.

We believe it’s important to understand and spot the dangers of the dark side of the moon.

It’s a bit like taking your car on a skid-pan session: you find your limits. A lot of offsite SEO is now done through content creation, PR, blogging and creating pieces that others will link to, effectively doing the SEO for you.

What we have created, through our extensive link building expertise, is a balance between content creation and ‘seeding’ or ‘spreading the word’ about the content we create, i.e. link building.

Coupling great content with all the right signals Google wants to see is the absolute priority here. Without both, the content (your website) will not rank.

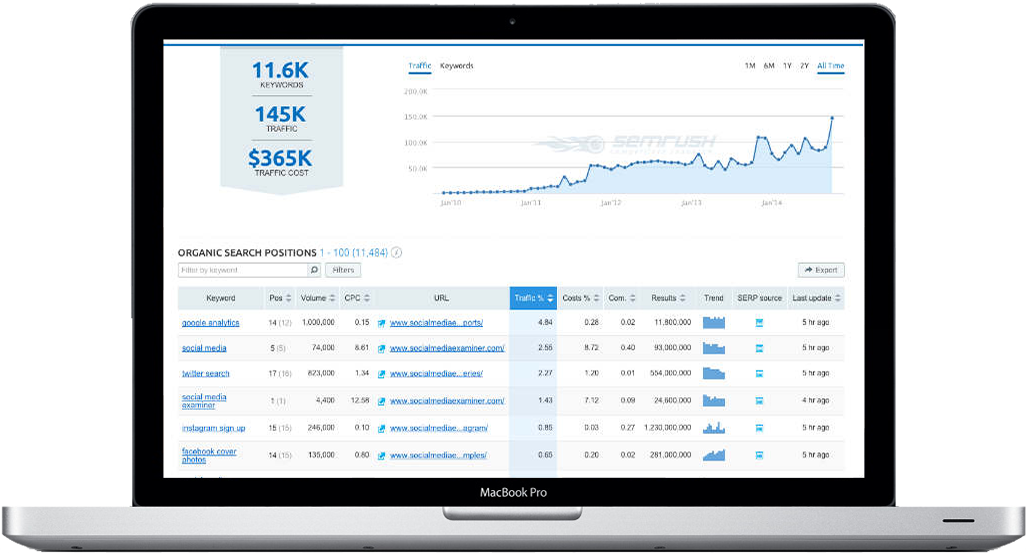

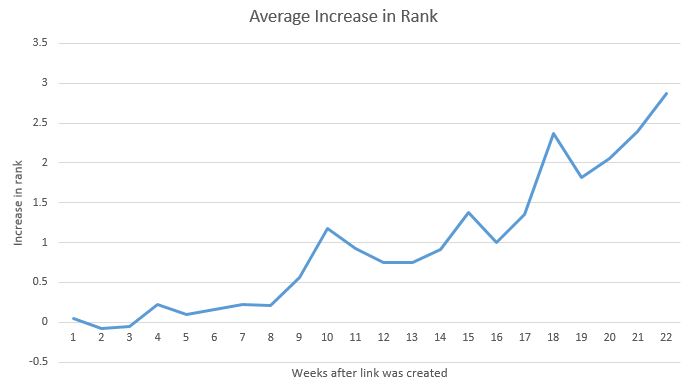

There are some great examples of how links can bolster a website in our case studies, but here’s a lovely pic to illustrate the point.

As you can see, our onsite SEO kicked off in May. Then, as we moved into June and July, our link building efforts started to flourish. On its own, onsite SEO gained us around 4% market exposure. Not bad at all, but it could be better.

Our link building took us to a peak of 27% exposure across all 104 of our keywords and an average rank of under 2 (that is, better than position 2 on Google).

These weren’t your run-of-the-mill long-tail keywords either; we’re talking ‘product in location’, where the product was a mainstream financial service. Link building is essential, but it must be done correctly alongside SEO-driven content.

Does my website’s content affect my ranking?

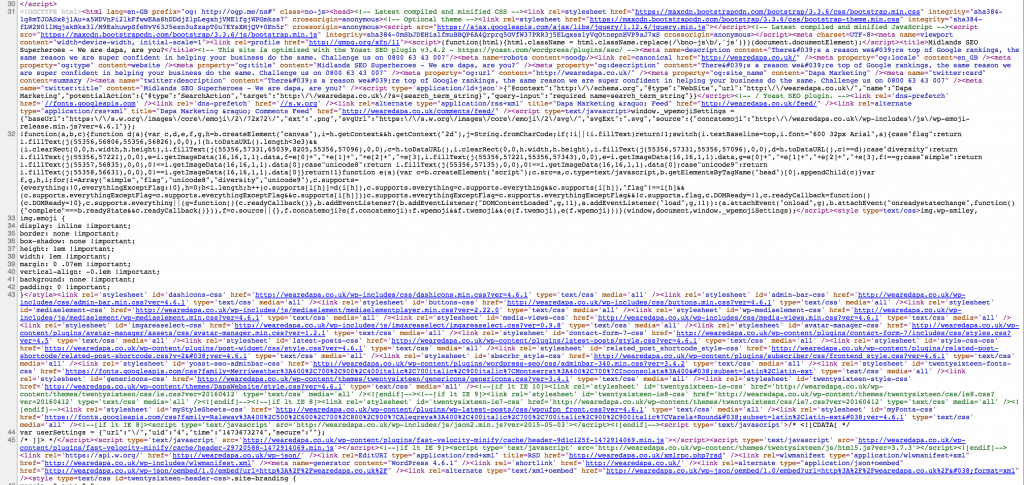

Google has a utility we will call a ‘spider’. A bit like real spiders, it crawls through websites, gathering and analysing information, reading code and words.

Establishing the right balance is key. The ‘spider’ that Google sends around your website and billions of others each day “reads” everything contained on your webpages.

The image below is what it sees:

Through the words you display on your pages the spider will attempt to understand what you’re actually talking about.

Displaying the right information in the right way enables Google to understand the page and thus rank it for the keywords you’ve targeted. For example, we want this page to appear in search results when people search for “Leicester SEO”.

So, zoom out on the page, press “Ctrl + F” (or “Cmd + F” on a Mac) and type Leicester SEO in the find box. It will highlight every time we’ve used that term on the page, which, as you can see, is quite a few. Couple that with:

- The URL being /Leicester-seo

- The title and description mentioning “Leicester SEO”

- The headings on the page containing the same

This builds up the whole story: it gives Google the knowledge it needs to understand the page’s subject and relevance. Because we know our stuff, Google will hopefully rank it well.

To tell its own SEO ‘story’, each page on your website needs to meet the same criteria, along with other factors, to rank effectively.
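You can automate the find-box exercise and the URL/title/description/headings checklist. Below is a minimal Python sketch. It assumes you already have the page’s URL, title, meta description, headings and body text as plain strings. `keyword_coverage` is a hypothetical helper for illustration. A raw count like this says nothing about how Google actually weighs those signals.

```python
import re


def keyword_coverage(phrase: str, url: str, title: str, description: str,
                     headings: list[str], body: str) -> dict:
    """Report where a target phrase appears, mimicking a case-insensitive find-box check."""
    pattern = re.compile(re.escape(phrase), re.IGNORECASE)
    # Assumption: URL slugs use hyphens for spaces, e.g. "Leicester SEO" -> "leicester-seo".
    slug = "-".join(phrase.lower().split())
    return {
        "in_url": slug in url.lower(),
        "in_title": bool(pattern.search(title)),
        "in_description": bool(pattern.search(description)),
        "headings_with_phrase": sum(1 for h in headings if pattern.search(h)),
        "body_mentions": len(pattern.findall(body)),
    }


# Hypothetical page data for illustration.
report = keyword_coverage(
    "Leicester SEO",
    url="https://example.com/Leicester-seo",
    title="Leicester SEO Agency",
    description="Leicester SEO that gets results.",
    headings=["Anyway, tell me more about your Leicester SEO", "How does SEO work?"],
    body="Our Leicester SEO team... Leicester seo is what we do.",
)
print(report)
# {'in_url': True, 'in_title': True, 'in_description': True, 'headings_with_phrase': 1, 'body_mentions': 2}
```

The point is consistency. If the phrase is missing from the URL, title or headings, the page tells Google a weaker story, whatever the body count says.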

How do I go about finding a Leicester SEO company?

I mean, if you do decide to go down that route, we’re literally right here.

If not, that’s fine, we’re happy as long as you get what you pay for. And we’ll give you some tips to ensure you get the best value for money. Here’s how to decide who you should choose and why.

There are several ways to go about this, but what you’re looking for is someone who can deliver rankings for your budget and requirements. SEO consultancy isn’t cheap and it takes time, so the first thing to rule out is anything cheaper than a couple of hundred pounds per month.

If you can’t afford to invest that amount, then SEO really isn’t an option for your marketing. Move on; don’t waste your money.

Try your best to avoid those ‘top 10 agencies’ lists. Places on them are often paid for and are not necessarily a guide to quality.

SEO takes time, so the last thing you want is to get on a mate’s rates tariff and end up being last in line to get the work each month because you’re not spending much.

It happens a lot. Our major tip on choosing an SEO agency is to go local and search in your area. Leicester, I assume, will be your choice, so here’s what to search for in Google (just copy and paste without the inverted commas): “SEO Company in Leicester” or “Leicester SEO Agency”. If you’re looking up a certain agency and they’re not in the first few results, move on.

If you’re looking for a provider, shortlist the top three results. Start with those that grab you and enquire using their online forms.

Go with those that get back to you quickly as long as the price is right. If they can’t answer their own forms quickly and create an efficient process for doing so, how will they run your campaign effectively?!

What Will SEO Cost Me?

How long is a piece of string? The cost of SEO is dependent on two things – the competitiveness of your market and your ambition.

Generally speaking, a small, local independent service provider will be at the bottom end of the scale. An estimated budget would be around £200 per month.

At the top end would be a national financial product like loans or insurance, which could warrant SEO budgets of £10k+ per month.

Somewhere in the middle would be your larger local businesses and niche national products/services with an estimated budget of around £1-2k per month.

Your SEO campaign should always be based on your individual goals. You shouldn’t be pigeon-holed into a particular package and be wary of paying too little.

SEO takes time, it takes resource and that costs money. If you’re on a very tight budget and timeline, then using an SEO agency might not be for you.

What’s the minimum I should spend?

There are lots of ‘off the shelf’ SEO packages out there designed for mass-market audiences on a budget. This isn’t particularly great: you need a bespoke campaign. That’s definitely a major part of our philosophy at Leicester SEO.

Earlier we discussed how no-one really understands SEO 100%. Now imagine what happens when someone with only a basic competence level starts to build packages for clients.

It can go both ways. You could end up with hugely overpriced campaigns that don’t work. Conversely, automated, hands-off and cheap packages can actually harm your website.

Staying away from those schemes can be difficult. It’s tempting, because as businesses we’re always looking to minimise expenditure and maximise income. Here’s a quick guide to what to avoid:

- Any form of SEO costing less than £100 per month.

- Yearly packages for a one-off fee.

- Prices based on numbers of links bought.

- Any packages that include paying for links.

Things to avoid in the topsy-turvy world of SEO

We appreciate that SEO isn’t as transparent as many other services, so here’s a quick guide for how to choose the right SEO company in Leicester.

Things you should look for:

- Campaigns that contain an emphasis on link building, content and outreach.

- Companies that rank their own website well in their own region.

- Agencies with clients in Leicester that rank well too.

- Agencies located nearby that will come and see you, or vice versa.

- Someone who answers the phone and helps with your questions.

- Agencies that give you a target rank, traffic or lead KPI.

- Most importantly, someone who has a history of success with relatable businesses.

Now, things you should avoid:

- Any SEO strategy that doesn’t include link building in the proposal.

- Companies that do not rank their own website well in their region.

- Someone who charges less than £100 per month for anything.

- Someone who charges less than £1000 per month for a national keyword.

Here’s one final thought before we crack on with the full guide.

Remember how we just told you to find a reputable SEO company? How did you find this page?

Give us a shout using the form below.

If you’re looking to learn SEO for yourself, we’ve created this guide for you.

Search Engine Optimisation or SEO is an analytical, technical and creative process which intends to improve the visibility of a website in search engines.

The main objective of SEO is to direct more visitors to your website, which will ultimately translate into more sales for your business. These SEO tips should help you create an SEO-friendly website for your business.

Leicester’s best SEO company: how we can help

(I know we like to blow our own trumpet, but we really are quite good – promise!)

Basic but effective white hat SEO strategies can help you drive more organic traffic to your website from search engines.

Before we go further, let’s try to understand what a white hat SEO strategy entails. White hat SEO refers to optimisation techniques, strategies or tactics that are directed towards the visitors of your website rather than focussing purely on search engines.

Black hat SEO refers to the aggressive use of optimisation techniques, strategies or tactics that focus solely on search engine rankings rather than the visitors of your website and, in the process, tend to break search engine guidelines.

Black hat SEO might give your website some immediate success through improved traffic but, in the long run can get your website banned from Google and other search engines.

Penalties awarded by Google for failing to adhere to its guidelines can have a severe long term impact on search rankings for important keywords and traffic to your website.

It is also best to steer clear of SEO techniques that can be described as ‘grey hat’, because what counts as grey hat today might be deemed black hat by Google tomorrow. Next, we’ll answer some common questions that people have when starting out in SEO.

What are the ‘rules’ for SEO?

Google emphasises the need for webmasters to adhere to its guidelines. As a result, it rewards websites that provide high quality content and follow white hat SEO strategies with high search engine rankings.

Google also punishes websites that disobey its guidelines, even if those sites initially ranked highly.

While these ‘rules’ are guidelines rather than laws, it is important to remember that they are laid down by Google. It should also be noted that some methods used to achieve higher rankings in Google are illegal.

For example, hacking is illegal in the UK as well as in the US. The decision to abide by these guidelines, or to bend or ignore them, rests with you; each choice brings varying levels of success, or retribution from Google’s spam team.

What you will read here falls within the Google recommended guidelines and will assist in boosting traffic to your website through natural or organic SERPs (Search Engine Results Pages).

Definition of SEO

SEO is about getting free traffic from Google, the most popular search engine in the world.

Finding opportunities for your business

To understand the art of SEO it is important to consider two factors.

Firstly, what intent does your target audience have when they search for queries related to your business? And secondly, what type of result will Google provide to its users when a particular search query is typed?

It is about assembling a lot of factors to create opportunities for your business. Any good optimiser should have a deep understanding of how search engines like Google generate their natural Search Engine Results Pages (SERPs) to satisfy the informational, transactional and navigational queries that a user might have.

Risk management

A good SEO marketer should have a strong grasp on the following:

- The short term and long term risks involved in optimising search engine rankings

- The type of site and content Google wants to return in its natural SERPs.

The objective of any SEO campaign is to create greater visibility for a website in search engine results. This would be a fairly simple process if it were not for the many difficulties that lie in the way.

There are rules or guidelines that need to be followed, risks to take and battles to be won (or lost) as part of an SEO campaign.

Free traffic

Ranking high on Google is very valuable for your business. Think of it as free advertising for your brand.

It is free advertising on perhaps the most valuable advertising space in the world. Traffic from Google SERPs is still considered the most valuable source of organic traffic (traffic that your website receives from unpaid search results) for a website.

It can be the deciding factor in determining whether a website or an online business is successful or not. The fact is that it is still possible to generate free, highly targeted leads for your business by simply optimising the content on your website to be as relevant as possible for a potential customer who might be on the lookout for a product or service that your company provides.

As you might have already guessed, there is a lot of competition today for that free advertising space, sometimes even from Google itself.

However, it is futile to compete with Google itself. Instead, focus on competing with your competitors.

SEO: the process

The process is something that can be practised anywhere, be it your bedroom or your workplace.

Traditionally it involves multiple skills such as the following:

- Website design

- Accessibility

- Usability

- User experience

- Website development

- PHP, HTML, CSS, etc.

- Server management

- Domain management

- Copywriting

- Spreadsheets

- Backlink analysis

- Keyword research

- Social media promotion

- Software development

- Analytics and data analysis

- Information architecture

- Research

- Log analysis

- Looking at Google for hours on end

Yes, it takes quite a lot to rank high on Google SERPs in competitive niches.

Is user experience important?

If you expect to rank high on Google SERPs in 2018, then make sure you provide your visitors with an enjoyable experience, rather than focussing on manipulation or old-school techniques to boost your rankings.

Does a visit to your website provide your users with a quality experience? If not, then it is better to start doing so or Google’s Panda algorithm will be out to get you and your site.

As Google keeps raising the bar for what it considers to be quality content year-on-year, it’s important to provide your users with a great experience when they visit your website. Not just with content but with pages that are easy to find, appealing multimedia and a sleek web design.

The success of your online marketing strategy can depend on investment in the following:

- Higher quality on-page content on your website.

- Website architecture and its usability.

- The balance between conversion and optimisation.

- Promoting your website.

What is a successful SEO strategy?

Simply put, relevance and trust are vital to the popularity of your website online. The days when Google tolerated manipulation have passed. In 2018, SEO will be about adding useful, relevant and quality content to your website that meets a definite purpose and also provides a high quality user experience.

SEO is not for short-term benefits. If you are serious about getting free traffic from SERPs, then be ready to invest sufficient time and effort into your SEO campaign.

Quality signals

Google is more likely to rank your website highly if you are prepared to add high quality content to it, thereby creating a buzz about your brand. That buzz might help you win backlinks from reputable sources, too.

If your SEO strategy revolves around manipulation or skirting the boundaries of Google’s guidelines, then the likelihood of being penalised by Google will be very high. Any penalty from Google will last until you fix the offending issue, which can sometimes take years.

For instance, backlinks still carry so much weight with Google that they can drive a website to the top position of a SERP.

That is why black hat SEOs manipulate them: it is the easiest way to rank a website on a search page. If you intend to build a brand for your business online, then it is important to remember that you shouldn’t use black hat methods.

Why?

Because, if using black hat SEO methods has got you a penalty, then merely ‘fixing’ the problems that are in violation will probably not be enough to recover the organic traffic to your website.

Recovering from a penalty is as much a ‘new growth’ process as it is a ‘clean up’ process for your website.

Google rankings constantly flap about

Google once updated its index of websites roughly once a month. However, from 2002 onwards, Google search results started changing in between those updates.

This continuous change of search results came to be known as ‘everflux’.

Remember, it is in Google’s best interests to make SERP manipulation as difficult as possible.

So, every once in a while, the people at Google responsible for the search algorithms modify the rules a wee bit, not only to improve the quality standards of the pages that contend for the top rankings, but also to ensure that those pages stay updated and relevant.

Just like the Coca-Cola Company, Google likes to keep its ‘secret recipe’ under lock and key. As a result, it sometimes offers advice that is helpful and, in other instances, advice that is just too vague to act on.

Some advice might simply be intended to stop SEOs from manipulating search engine rankings in their favour.

Google has gone on record to say that it intends to frustrate SEOs who try to increase the volume of quality traffic to their websites using low quality strategies that are classed as web spam.

However, at its core SEO is still about the following:

- Keywords and quality backlinks

- Reputation, relevance and trust

- Quality of content and visitor satisfaction.

The key to creating an SEO strategy that works is making sure that you provide your visitors with a great experience.

Authority, relevance and trust

A key aspect of optimising a webpage is ensuring that all the content on that page is trusted and relevant enough to rank for a specific search query.

It is all about ranking for keywords on merit over a long period of time and not through manipulation of Google specified guidelines.

To do so, you should follow the white hat rules laid down by Google, which aim to build the trust and authority of your website (and brand) naturally over time.

Whichever route you take for the SEO of your website, remember that if Google spots you using manipulative SEO techniques, your website will be classified as spammy and you will be punished for it.

If your website has been penalised, then sometimes it can take years to address the violations and overturn the penalty.

Simply put, Google does not want anybody to manipulate its search rankings easily, as that can be quite a dampener on its own objective of having you pay for Google AdWords.

Ranking high organically on Google listings can be viewed as real social proof for a brand or business, a way to steer clear of PPC (pay per click) costs and also the best possible way to drive valuable traffic to your website.

The objective of doing SEO is making sure that you rank high organically on SERPs without incurring any additional expenditure.

Is user experience an important factor?

When Google released its quality guidelines, the phrase ‘user experience’ was mentioned in them about 16 times.

However, Google maintains that user experience is not yet a ‘classifiable ranking factor’, at least for desktop searches.

For mobile searches, it is a whole different ball game, as UX (user experience) is usually the basis of any mobile-friendly update.

Even if UX is not considered a ranking factor, it can be handy to understand what exactly constitutes ‘poor user experience’ in Google’s eyes. If Google identifies poor UX signals on your website, you can be sure it will affect the rankings of your important keywords.

What constitutes a bad user experience?

For Google, rating user experience for a website from the perspective of the quality guidelines revolves around marking the page down for the following:

- Website design that is misleading or potentially deceptive

- Sneaky redirects

- Spammy user generated content and harmful downloads

- Low quality main content or MC on the landing page of your website

- Low quality supplementary content or SC on any page of your website

What is supplementary content (SC)?

For a web page to have a positive UX, Google mentions the importance of using useful and functional supplementary content.

Supplementary content contributes to an improved UX on a webpage even though it cannot directly help a page reach its ranking objectives.

Webmasters create and use SC as an integral part of improving the user experience of a webpage.

A common example of SC is navigational links that help your visitors access other parts of your website. In some instances, content hidden behind tabs is also considered part of the supplementary content of that page.

Not having SC on your webpage or using SC that is not helpful to your visitors can contribute towards a ‘low quality rating’ for your webpage.

This low quality rating is also dependent on the type of website and the purpose of the page.

Small websites that usually exist to serve their communities are judged by different standards when compared to larger websites that have a number of webpages and content.

For PDFs or JPEG files no SC is needed or expected. It is important to remember that no amount of SC can help you if the main content (MC) on your webpage is of poor quality. Important points about SC:

- Supplementary content can be largely responsible for making a high quality page more satisfying for a visitor.

- Your SC should be relevant and most importantly it should ‘supplement’ the MC on the webpage.

- Smaller websites might not require using as much SC on their webpage as compared to large websites.

- A page can still be ranked very highly on a Google SERP without using any SC.

Google expects websites representing large companies or corporations to devote a lot of time and effort to creating a great user experience for visitors with the help of relevant SC.

On such large websites, SC may be the main way through which visitors can explore the site further and gain access to MC. Such websites also tend to be content intensive. Therefore, the lack of helpful supplementary content can lead to a poor rating.

If it becomes difficult for a visitor to access the MC of a website because of ads deliberately designed to distract them, that harms the overall user experience of the page and could be a reason for a low rating.

Despite the importance of SC, Google is considerably more interested in the MC of your webpage and the reputation of the domain the page sits on, both for your site and for competing pages on other domains.

Targeting conversions with user experience and usability

Consider pop-up windows as an example: Jakob Nielsen, a renowned usability expert, stated that 95% of web visitors were annoyed by unnecessary and unexpected pop-up ads, especially the ones that contained unwelcome advertisements.

Pop-ups have been consistently voted as the most hated advertising technique used online. Web accessibility students would probably also agree on the following:

- A new browser window should only be created if the user wants one.

- Pop-up windows should not mess up the screen of a visitor or a prospective client.

- By default, all links should open in the same window. A possible exception is a page that contains a list of links: there, it can be advisable to open the links in a new window, so the user can easily return to the original list.

- Make your visitors aware that by clicking on something they could be about to invoke a pop-up window.

- Employing too many pop-up windows on a webpage can have a disorienting effect on the user.

- Preferably provide your user with an alternative to clicking on a link that could possibly open a pop-up window.

A pop-up window can be a hindrance to web accessibility, but it can also have an immediate impact, so it can be foolish to dismiss a technique with a proven track record of success.

Also, very few clients would choose accessibility over the increased sign-ups that a pop-up can bring to a website.

Generally, it is recommended that you do not use a pop-up window on days when you publish a blog post on your website, as it severely reduces the likelihood of readers sharing the post on social media.

Interstitials on a mobile site can be extremely annoying for a visitor and also harm the user experience of that page. What is an interstitial?

It is an ad that appears while the page you clicked on is still loading.

Google announced that, from November 2015, any mobile page displaying an interstitial that hides a high proportion of the MC (main content) would no longer be considered mobile friendly, as it significantly affects the user experience of visitors on that page.

However, webmasters can still use their own implementations of interstitial banners, as long as they do not block a large chunk of the page’s content.

If Google thinks that the use of an interstitial is severely affecting the user experience of your visitors, then it can be bad news for your rankings.

Mobile web browsers, on the other hand, can provide alternative ways to promote an app without affecting the user experience of a visitor on the page.

If you are still adamant about using a pop-up window, consider showing it as part of your page’s exit strategy, for example when a visitor is about to leave.

That way, by the time visitors come across the pop-up, they are hopefully not annoyed by it but intrigued by the quality of the main content on your page.

This can be a great way to increase the number of subscribers to your site, with a conversion rate similar to, if not better than, pop-ups that appear as soon as a visitor arrives.

For any SEO, the priority should always be to convert customers without resorting to techniques that affect your Google rankings negatively.

In your rush to boost your rankings or convert leads into sales, do not forget the primary reason why a visitor landed on your page in the first place.

If you do forget, you might as well place a big target sign over your website for Google to ‘get’ you.

Remember, Google wants to rank only high quality websites

Historically, Google has looked to classify sites in its ‘directory’ of sorts, sorting them into categories. Whatever category your site falls into, you do not want it tagged with a low quality label, whether that label is put there by a human rater or by a Google algorithm.

While human ratings might not directly affect your site’s rankings, it is still best to avoid anything that can be used by Google to assign your site a low quality label.

‘Sufficient Reason’

You will come across these two words quite often in the quality guidelines. In some scenarios, a single problem in a specific area is ‘sufficient reason’ to mark a page down immediately.

Here we take a look at excerpts from the quality guidelines that specifically relate to these two words:

- An unsatisfying amount of MC (main content) on a page is ‘sufficient reason’ to give that page a low quality rating.

- Low quality MC on a page is ‘sufficient reason’ to give that page a low quality rating.

- Any page that lacks the appropriate E-A-T (Expertise, Authoritativeness, Trustworthiness) provides ‘sufficient reason’ to give it a low quality rating.

- Negative reputation is ‘sufficient reason’ to give a page a low quality rating.

According to Google, MC should be the primary reason why a page exists.

Here are some reasons why the MC of your page can be the main cause of your poor quality rating:

- The quality of MC on the page is low.

- The amount of MC is unsatisfying for the ‘purpose’ of the page.

- There is inadequate information provided about the website.

This will affect the user experience of your visitors and thereby affect your rankings.

Poor quality MC is perhaps the biggest factor for your page being assigned the lowest possible quality rating by Google.

Additionally, if you use pop-up ads that block a major portion of the MC on your page then it contributes towards poor user experience and as a result your rankings will suffer.

Generally, pages that provide visitors with poor user experience will receive low quality ratings.

For example, if a page tries to download malicious content or software, it will be given a low quality rating even if it contains images that are related to the search query.

If a page is designed poorly, or its layout and use of space are intended to deflect attention away from the MC, it will be given a poor quality rating.

After the on-page content itself, the following are given the most significance in determining whether or not a page is of high quality:

Poor supplementary content

Here are some reasons why the supplementary content on your page might be considered low quality:

- The SC used on the page is distracting or unhelpful because it is intended to benefit the website rather than the user.

- The SC used is distracting or unhelpful for the purpose of the page.

Poor supplementary content can lead to your page being marked with a low quality rating.

Distracting ads

For example, an ad depicting a model in a bikini might be appropriate if your site is about selling bathing suits.

But if that is not the case, such an ad can be highly distracting for a visitor who has landed on the page for some other reason. Therefore, it affects the quality of user experience your page is providing.

As a result, your site might be handed a poor quality rating by Google.

Poor maintenance

Failing to maintain your website properly or update it regularly could be another reason for a poor quality rating.

SERP sentiment and poor user ratings

If your website has a negative reputation, it could be given a low quality rating even if it hosts no malicious software and is not involved in financial fraud.

This is especially true for a YMYL (Your Money or Your Life) page.

Lowest rating

You would probably have to do a lot of things wrong simultaneously to be handed the lowest rating.

Throughout the quality document you will come across the phrase “should always receive the lowest rating” quite often.

That is one direction you should look to avoid at all costs for your website.

So, when does Google assign a page the lowest quality rating possible?

The statements mentioned below should give you a fair idea:

- Websites or web pages that lack any sense of purpose.

- YMYL websites that consist of pages with insufficient or no information about the website.

- Websites or web pages that are created to make money while making no effort to help users.

- Pages with low quality MC, such as a webpage created without any MC. Pages with no MC usually lack any purpose or are used for deceptive purposes.

- Pages that are intentionally created with a bare minimum of MC, or with MC that is irrelevant and thus unhelpful for a user.

- Pages whose MC is copied entirely from another source with little or no effort to provide value for users. In such a scenario, the page will be assigned the lowest quality rating possible even if the original source is credited.

- Pages that originate from hacked, defaced or abandoned websites.

- Pages with content that lacks authority or is considered unreliable, untrustworthy, misleading or inaccurate.

- A page or website that is harmful or malicious in nature.

- Websites with a malicious or negative reputation. Proof of fraudulent or malicious behaviour is reason enough for the lowest rating possible.

- Deceptive webpages or websites that ‘appear’ to provide helpful information but are in fact created for other purposes, with the intention to deceive or harm users for personal gain, will be handed the lowest rating possible.

- Pages that use visual design elements such as page layout, link placement, images and font colour to manipulate users into clicking certain links are considered deceptive page design and will be penalised with the lowest quality rating.

- Pages that just do not ‘feel’ trustworthy and appear to be fraudulent in the eyes of Google will be given the lowest quality rating.

- Pages that ask for personal information without a legitimate reason, or websites that ‘phish’ for passwords, will be handed the lowest quality rating.

Websites lacking proper maintenance are rated poorly

Your website would be given a low quality rating if it contains broken links, images that do not load or content that is outdated or stale.

Non-functioning or broken pages are rated poorly

Google provides clear guidelines on how you can make your 404 pages more useful:

- Make sure that you clearly tell your visitors that the page they were looking for could not be found.

- Create 404 pages using language that is considered inviting and friendly.

- Make sure that the 404 page you have created retains the same look and feel as the rest of your site.

- Consider linking to your most popular blog posts or articles, and also provide a link to your site’s home page.

- Ensure that you provide your user with a way to report any broken links in your website.

- If a missing page is requested, make sure your web server returns a 404 HTTP status code.
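To illustrate that last point, here is a minimal sketch of a web app written against Python’s standard WSGI interface. The site content (`PAGES`) and the wording of the error page are hypothetical placeholders. The idea is that visitors to a missing URL get a friendly, on-brand page with a link home, links to popular posts and a way to report the broken link, while the server still sends a genuine 404 status rather than “200 OK”.

```python
# Hypothetical site content: path -> HTML body
PAGES = {
    "/": "<h1>Home</h1>",
    "/blog/popular-post": "<h1>A popular post</h1>",
}

# Friendly 404 page that keeps the site's tone and links to useful pages
NOT_FOUND_HTML = """<h1>Sorry, we couldn't find that page</h1>
<p>The page you were looking for may have moved or no longer exists.</p>
<p>Try our <a href="/">home page</a> or one of our
<a href="/blog/popular-post">most popular posts</a>.</p>
<p>Found a broken link? <a href="/contact">Let us know</a>.</p>"""


def app(environ, start_response):
    """WSGI app: known paths get 200, everything else a real 404 status."""
    path = environ.get("PATH_INFO", "/")
    if path in PAGES:
        status, body = "200 OK", PAGES[path]
    else:
        # The friendly page is served WITH a 404 status, not "200 OK"
        status, body = "404 Not Found", NOT_FOUND_HTML
    data = body.encode("utf-8")
    start_response(status, [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(data))),
    ])
    return [data]
```

To try it locally, the `app` function can be passed to `wsgiref.simple_server.make_server`. On a real site, the same rule usually lives in your CMS or web server configuration rather than in hand-written code.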

How are pages with error messages or no MC rated?

Google does not index pages that lack a specific purpose or have irrelevant or inadequate MC.

A well created 404 page, along with the proper setup, goes a long way towards preventing this from happening. Some pages load with content created by the webmaster but at the same time display an error message or have missing or insufficient content.

There can be many reasons why a page loads with insufficient content.

For example, if a link is broken or dead, the page it points to no longer exists, so it loads with an error message instead.

Now, it is normal for websites to have a few pages that are non-functioning or broken.

But if you do not take steps to remedy them immediately, those pages will be given a poor quality rating even if all the other pages on the website are of the highest quality possible.

According to Google’s John Mueller, 404 errors on invalid URLs do not affect the ranking or indexing of your site in any way.

We always advise our clients to not ignore broken links on their site and to take remedial steps to deal with such pages properly.

This ‘backlink reclamation’ recovers link ‘equity’ that you thought you had lost and can still give your site great value with regards to its rankings.

A lot of broken links flagged by Google turn out to be irrelevant and inconsequential for your search engine rankings.

So, it is important to first single out the links that are affecting your rankings and remedy them.
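As a rough sketch of how to single out the broken URLs that matter, the Python below assumes you already have an export (from whichever SEO tool you use) listing each broken URL with the number of external domains still linking to it, plus a hypothetical mapping from each URL to its closest live replacement. It keeps only URLs that still attract backlinks and have a replacement, orders them by referring domains, and returns pairs you could turn into 301 redirects. Redirecting is one common way to reclaim link equity, not necessarily the only one.

```python
from dataclasses import dataclass


@dataclass
class BrokenUrl:
    path: str
    referring_domains: int  # external sites still linking to this URL


def reclamation_plan(broken, replacements):
    """Return (old_path, new_path) redirect pairs, most-linked first.

    URLs with no external backlinks are skipped: they are usually
    inconsequential for rankings and can simply keep returning a 404.
    """
    worth_fixing = [
        b for b in broken
        if b.referring_domains > 0 and b.path in replacements
    ]
    worth_fixing.sort(key=lambda b: b.referring_domains, reverse=True)
    return [(b.path, replacements[b.path]) for b in worth_fixing]
```

URLs that still have backlinks but no obvious replacement drop out of this plan, so they are worth reviewing by hand.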

Luckily, finding broken links on your site is relatively easy as there are many SEO tools available to help you with it.

We still believe in the effectiveness of analytics and prefer to use it to look for dead or broken links especially if the site has a history of migrations.

In some scenarios, crawl errors are detected if there are structural issues within your CMS or website.

How can you be sure? Easy: check the origin of the crawl error, and double-check if necessary.

If you detect a broken link on your site within your webpage’s static HTML, then it is always advisable to fix it.
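For links in a page’s static HTML, a basic check can be as simple as extracting every `href` and comparing the internal ones against the URLs you know exist. The sketch below uses Python’s built-in `html.parser`. It assumes the set of live paths comes from your own crawl or CMS export, and it ignores external links and non-web schemes such as `mailto:`.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def broken_internal_links(html, page_url, live_paths):
    """Return internal link paths in `html` that are not in `live_paths`."""
    parser = LinkExtractor()
    parser.feed(html)
    site = urlparse(page_url).netloc
    broken = []
    for href in parser.hrefs:
        absolute = urlparse(urljoin(page_url, href))  # resolve relative links
        if absolute.scheme not in ("http", "https"):
            continue  # skip mailto:, tel:, javascript: and similar
        if absolute.netloc != site:
            continue  # external link: not checked here
        if absolute.path not in live_paths:
            broken.append(absolute.path)
    return broken
```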

If Google’s algorithms like the content on your site, Googlebot might explore further to discover more of your content.

For example, it might look for new URLs in JavaScript.

So, if it tries a new URL and that URL returns a 404 message, you miss out on a valuable opportunity to boost your rankings.

The quality guidelines can be a great reference point for you in terms of how you can avoid getting low ratings and potentially avoid any punishments from Google.

Google won’t rank your site if you have low quality pages when it has better options

Even pages with exact matches to key search terms might not rank highly in 2018 if they do not have all the other important ‘ingredients’.

Google usually prefers to send long tail search traffic, such as a user searching by voice on their mobile, to high quality pages that clearly explain a topic or concept and its connections with relevant sub-topics, rather than to a website with low quality pages that simply use the exact search phrase.

Identifying dead pages

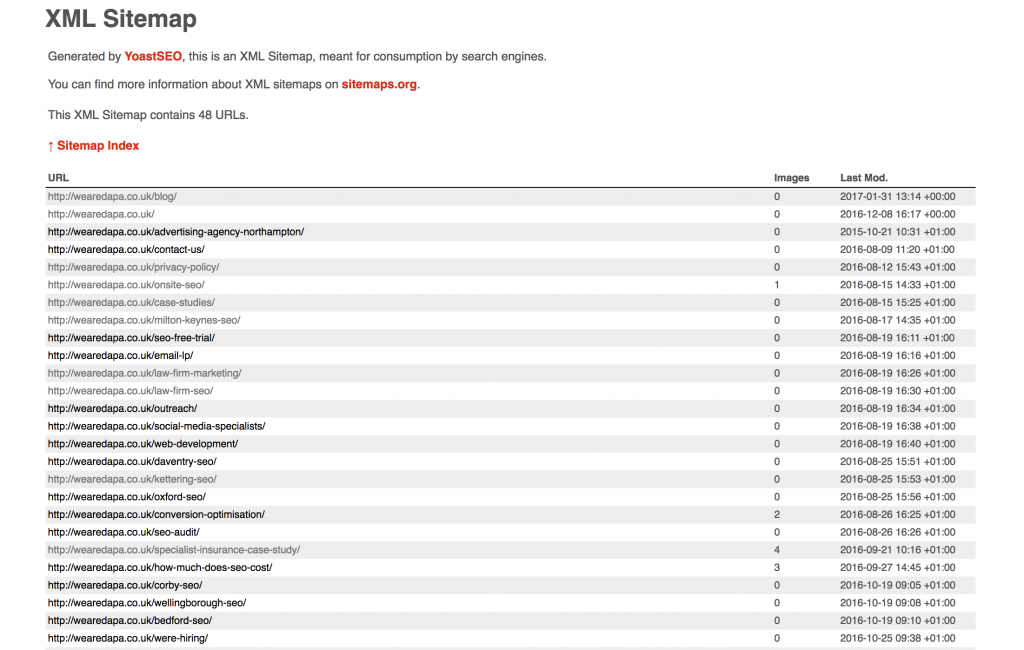

If a large portion of the content you have submitted in an XML sitemap is being de-indexed by Google, there might be a problem with your web pages.

How do you find out which pages Google is not indexing? We look to identify the problem using a performance analysis.

This involves combining data from a physical crawl of your website with Google Webmaster Tools data and analytics data.

It is possible to identify the type of pages the CMS generates by conducting a content type analysis.

Such an analysis will also help in evaluating how each section of your website is performing.
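A content type analysis can start very simply. Assuming your URLs reflect your CMS’s page types in their first path segment (for example `/blog/`, `/products/` or `/tag/`), the hypothetical sketch below groups URLs by that segment and totals their organic sessions from an analytics export, giving a quick per-section view of performance. Sites whose URL structure does not mirror their page types would need a different grouping rule.

```python
from collections import defaultdict
from urllib.parse import urlparse


def section_of(url):
    """Use the first path segment as a rough stand-in for content type."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    return segments[0] if segments else "(home)"


def performance_by_section(sessions_by_url):
    """Count pages and total organic sessions for each site section."""
    summary = defaultdict(lambda: {"pages": 0, "sessions": 0})
    for url, sessions in sessions_by_url.items():
        row = summary[section_of(url)]
        row["pages"] += 1
        row["sessions"] += sessions
    return dict(summary)
```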

Let us assume that you have about 1,000 pages of content on your website.

Now, if only 10 of these 1,000 pages are generating organic traffic for your website over a span of 3 to 6 months, you can consider the remaining 990 pages worthless with regards to your SEO goals.

If these pages were of high quality, then they should have been generating traffic for your website. But as they are not doing so, we can safely assume that the quality of these pages is not of the standards that Google desires.

Thus, by separating the pages on your website that generate no traffic over a specific timeframe from those that do, you can easily clean up a large chunk of redundant URLs.
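At its core, this clean-up is a set comparison. In the sketch below, the three inputs stand in for exports we assume you have: the URLs in your XML sitemap (or physical crawl), the URLs reported as indexed in Google Webmaster Tools, and organic sessions per URL from your analytics over your chosen 3 to 6 month window. The function name is illustrative only, and flagged pages are candidates for review (improve, merge or remove), not automatic deletions.

```python
def audit_pages(sitemap_urls, indexed_urls, organic_sessions):
    """Flag sitemap URLs that are not indexed or earned no organic traffic.

    organic_sessions: dict of URL -> sessions in the chosen timeframe;
    URLs missing from it are treated as having zero sessions.
    """
    sitemap = set(sitemap_urls)
    not_indexed = sorted(sitemap - set(indexed_urls))
    no_traffic = sorted(u for u in sitemap if organic_sessions.get(u, 0) == 0)
    return not_indexed, no_traffic
```

With 1,000 sitemap URLs of which only 10 received organic sessions, `no_traffic` would contain the other 990.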

Technical SEO tutorial

Here at dapa, we like to put our ‘Google Search Engineer’ hat on when we conduct SEO audits for our clients, as we have found it to be one of the best ways to provide value that benefits them over a long period of time.

Google has a rather long list of technical requirements that it “advises” any website owner to meet.

Additionally, they also provide a pretty exhaustive list of things you cannot do if you want to rank high on a SERP.

Adhering to all of Google’s specified guidelines is no guarantee of success, but not following them can be a sure-fire recipe for poor rankings in the long run.

A single odd technical issue can seriously affect your entire site, especially if the issue is rolled out across many pages.

The advantage of following all of Google’s technical guidelines is often secondary.

It might not boost your rankings, but it will help prevent your website from suffering major setbacks such as penalties or being filtered out by Google’s algorithm.

So, if your competitors fall, your website naturally rises in the rankings as a result.

Mostly, your website’s rankings will not suffer due to individual technical issues, but by addressing those issues you can enjoy many secondary benefits.

Also, if your website has not been marked down by Google then it is not demoted and as a consequence it climbs up the rankings.

We believe that the most sensible thing to do in such a situation is to not give Google a reason to penalise or demote your site.

Employing such a strategy might involve investing for the long term but over the years we have found it to be one of the most effective ways to rank on page 1 of a Google SERP.

What is Domain Authority (DA)?

Domain Authority (DA), as Moz.com refers to it, is widely regarded as a very important ranking factor.

But, what is DA exactly?

One thing we can be certain of is that there is no ‘trust’ or ‘authority’ rank which Google uses to evaluate the pages on your website.

PageRank can be considered an important factor that helps build trust in your website in the eyes of Google. It depends on the number of links pointing to your website and the quality of those links.

If your website receives a lot of high quality external links, then it earns trust with Google and is therefore ranked higher.

DA is more about comparing your site with sites that are already popular, trusted and reputable. Reputable sites usually have a high DA score, with many backlinks from sites with similarly impressive DA.

That is why link building has always been a popular SEO tactic.

Counting the number of links your website has and the quality of those links is usually how third party tools evaluate a website’s DA.

In the past, massive trust and DA ranks were awarded to websites that had gained a lot of links from reputable sources and other online businesses with high domain authority ratings.

At dapa, we associate DA and trust based on the quality, type and number of incoming links a website has.

Examples of trusted domains include the W3C, Wikipedia and Apple.

How can you position your site as an Online Business Authority or OBA?

The answer to that is quite straightforward actually:

By offering a killer online product or service and backing it up with a lot of useful and high quality content.

Sounds simple enough doesn’t it? But executing it can prove to be anything but straightforward.

If you have successfully managed to establish your site as an OBA, then how can you capitalise on it?

There are two ways you can go about it.

You can either turn your site into an SEO black hole (usually only applicable to the biggest brands) or keep churning out high quality informative content all the time.

Why?

Because Google will always rank it!

Is it possible to imitate an OBA on a much smaller scale and in specific niches by identifying what it does for Google and why Google ranks it highly?

Yes, but it takes a lot of time and work.

Recreating the same level of service, experience or content even on a much smaller scale can be anything but easy.

Over the years, we have found that focussing on content is the easiest and perhaps the most sustainable way through which a business establishes itself as an OBA in a particular niche.

Along with that, systematic link building and promotion of what you are doing can go a long way in helping your website achieve its objectives.

Brands are the solution, not the problem

Creating a brand for your business online will help in distinguishing you from your competition.

If you are a brand in your particular niche, then Google would prefer to rank you over your competition as it would ‘trust’ you to not spam or use dummy content and in the process reflect poorly on Google.

Moz gives a high DA score to websites they trust, which gives them a considerable boost in converting leads into sales.

Now, as well as creating high quality original content, make sure that the content you create is specific to your topic.

Also, if your website gets links from sites that are thought of as brands in their respective niche then it counts as a quality backlink and will boost your rankings.

It is easier said than done but that should be the focus of your SEO strategy.

You should always look to establish your main site as an online brand.

Does Google have a preference towards bigger brands in organic SERPs?

Er, yes.

There is no denying that bigger brands have an advantage over smaller upcoming businesses in Google’s ecosystem.

Also, Google can try to “even” out this disparity by encouraging smaller brands to spend more on Google AdWords.

However, a small business can still achieve success online as long as they focus on a long term strategy prioritising depth and quality with regards to how the content is structured on the website.

Is domain age an important Google ranking factor?

No, not really. For example, if your website is hosted on a ten year old domain that Google has no idea about then it is similar to having a brand new domain.

On the other hand, if your website is hosted on a domain that has been continuously cited year on year and has, as a result, developed a reputation for trust and authority, then it is very valuable for your SEO.

But again, it is important to remember that the ranking benefits you might enjoy as a result of hosting your website on a reputed and trusted domain are not solely because of the ‘age’ of your website. Therefore, the focus should always be on creating great content and having high quality links to your site.

A year old domain cited by reputed and authoritative sites is probably more valuable in terms of your search engine rankings when compared to a ten year old domain with no search performance history and no links.

We also believe that without first considering ‘ranking conditions’, you cannot discover ‘ranking factors’ for your website. Other important ranking factors include:

- Domain age, not on its own though.

- The length of time your domain is registered for. Again, on its own this should not be thought of as a vital ranking factor. It is common knowledge that valuable domains are sometimes paid for many years in advance, whereas backdoor or illegitimate domains are seldom used for more than a year. Paying many years in advance for a domain is usually just a way to prevent others from using that domain name and does not in any way signify that you are doing something very valuable in the eyes of Google.

- The domain registration information, especially if it was kept hidden or anonymous.

- TLD or top-level domain of your website. The TLD is the last segment of your domain name, following the final dot in your website’s internet address. For example, .com versus .uk (as in .co.uk) or .com versus .info. The TLD helps to identify what your website is associated with, such as the purpose of the site, the organisation that runs it or the geographical area it originates from.

- Root domain or subdomain of your website.

- Domain past owners. For example, how often the owners have changed for a particular domain.

- Domain past records. For example, how often the IP has changed for a particular domain.

- Keywords used in the domain.

- Domain IP

- Domain IP neighbours.

- Geo-targeting settings in Google Webmaster Tools.

Google penalties for Black Hat SEO techniques

In 2018, you need to be more careful about the methods you employ to boost your SEO rankings.

You run the risk of appearing on Google’s radar if they smell any foul play, which can increase the chances of a penalty.

The Google web spam team are currently on the warpath in terms of identifying sites that use unnatural links along with other tactics that intend to manipulate the SERP rankings.

Google is trying to ensure that it takes longer to achieve results from both black and white hat SEO techniques, and it remains intent on creating flux in its SERPs based on where the searcher is located when the query is typed and whether a business is located near the searcher.

To improve your rankings, there are still some factors that you cannot influence legitimately without risking a penalty.

That said, it is still possible to drive more traffic to your website using many alternative techniques.

Ranking Factors

Google considers hundreds of different ranking factors, with signals that can change on a daily, monthly or yearly basis, to help it decide where your page should rank in comparison to other competing pages on a SERP.

Many ranking factors are either on site or on page while others are off site or off page.

Some ranking factors are based on where the searcher is located at the time of typing the search query, or depend on the searcher’s search history.

At dapa, we use our experience and expertise to focus on strategies that provide the best Return on Investment for your efforts.

Learn SEO basics

If you are starting out, it is important not to be too brash with your SEO strategy, believing that you can deceive Google or its algorithms all the time.

It is best to keep your strategy basic and focus on the E-A-T (Expertise Authoritativeness Trustworthiness) mantra that Google keeps advocating.

Direct your energy into ensuring a great user experience.

Always, always use SEO techniques that fall within Google Webmaster Guidelines.

Be clear right at the outset about the SEO tactics you are going to employ and then stick to them patiently.

This will ensure that you avoid getting stuck in the midst of an important project.

If your objective is to deceive the visitors who land on your site through Google, then remember that Google is not your friend.

Google will send a lot of free traffic your way, if you manage to reach the top of a SERP. So, following Google recommended guidelines can be very beneficial.

There are a whole lot of SEO techniques that are effective in terms of boosting your site’s rankings in Google but are against their specified guidelines.

For example, many links that at one time helped your site reach the top of a SERP might today be hurting your site and its chances of ranking high on a SERP.

Keyword stuffing is one such black hat SEO technique that might be holding your page back from achieving the desired ranking on a SERP.

Link building should be done in a smart and careful manner, such that it does not put you on Google’s radar, thereby reducing the likelihood of your site being penalised.

Do not expect instant results or to rank high on a Google SERP without first putting in the necessary amount of investment and work.

You do not have to pay anything to get your site into Google, Bing or Yahoo. Major search engines will discover your website pretty quickly by themselves within a few days.

This process can be made easier if your content management system (CMS) ‘pings’ search engines whenever content on your site is updated, for example by using an RSS feed or an XML sitemap.
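As an illustration of the XML side, the sketch below builds a minimal sitemap in the sitemaps.org format using Python’s standard `xml.etree.ElementTree`. The page list and dates are placeholders. Most CMSs or plugins generate this file for you, and how it is then submitted or announced to search engines (for example through Google Webmaster Tools) is outside the scope of this sketch.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """pages: iterable of (absolute_url, last_modified 'YYYY-MM-DD') tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    # Returns UTF-8 bytes starting with an <?xml ...?> declaration
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
```

The resulting bytes can be written to a file such as `sitemap.xml` at the root of your site.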

The eternal importance of backlinks

Apart from how relevant your website is, Google will rank it based on the number of incoming links to your site (amongst hundreds of other metrics) from external sites and the quality of those incoming links.

Usually a link from another site to your own site acts as a vote of confidence for your webpage in the eyes of Google.

Now as the page continues to receive a high number of links, it earns more trust in the eyes of Google, which translates into higher rankings for your page.

If a highly reputable site links to your webpage, then you get a quality backlink.

The amount of trust Google has in the domain of your website will also affect how it is ranked. Backlinks from other websites are perhaps the most crucial part of how your site will be ranked on a SERP, and they trump every other ranking factor that Google considers.

High quality content produces high quality results

One of the easiest ways to rank quickly is by creating original high quality content. Search engines always tend to reward websites that provide original content – the kind which has not been seen before.

For starters, these pages are indexed very quickly. Ensure each of your pages has sufficient text created specifically for that page.

This way you can get your pages ranked quickly without having to jump through hoops to do so. If the content on your website is original and of a high quality, it is possible to generate quality inbound links or IBL to your site.

Now, if the content used on your website is already available on other platforms, you will find it difficult to get quality inbound links, as Google prefers variety in its results.

Also, if you use original content of a reasonably high level of quality, you can then promote your content to websites that have online business authority.

Talking about trust…what about distrust?

Now if a lack of trust is perceived by Google towards your website, then it can reflect negatively on your SEO efforts.

Another way through which search engines can find your website is if other websites are linking to it.

It is also possible to directly submit your site to search engines, but it is probably not necessary for you to do that.

Instead, it would be more worthwhile to register your website with Google Webmaster Tools. Bing and Google use crawlers known as Bingbot and Googlebot respectively, which spider the web looking for new links.

These bots might follow a link to your homepage and then crawl and index the pages of your website, as long as all the pages on your site are linked together.

If your site has an XML sitemap, Googlebot can use it to find and index the pages on your website.

A widely held belief amongst many webmasters is that Google does not permit new websites to rank highly for competitive searches until the web address ‘ages’ and acquires trust in the eyes of Google.

Some misconceptions and unknown facts

Sometimes your website might rank high on Google SERPs instantly and then disappear for months. Think of this as your honeymoon period with Google. When Googlebot crawls the pages on your website and indexes them, your website gets classified by Google.

This classification can have substantial impact on your rankings. Ultimately Google wants to figure out what the intent of your website is.

It wants to know whether you created a website with the sole purpose of it being an affiliate site for Google, a domain holding page or a small business with a definite purpose.

These days, if you want your website to rank highly on Google SERPs, it is important that it conveys that yours is a legitimate business with clear intent and, more importantly, one looking to satisfy its customers. If a page is created solely to make money from the free traffic provided by Google, it could be classified as spam, especially if the content is ‘thin’.

Make sure the text and links on your website are transparent with Google about the type of business you are, how you operate and how you are rated online as a business.

Google will rate your website based on this transparency.

Using keywords, not just links, to bump up your SEO

If you want to rank for a particular ‘keyword phrase’ search, you must include the keyword phrase or relevant words in your content or have links that point to your website or webpage.

You do not have to use all the words in the keyword phrase together in your content, but it is generally advisable to do so.

Ultimately, the steps you might have to take to compete for a specific keyword could depend on what your competition is doing to rank for that keyword.

If you cannot come up with a unique SEO strategy that helps you leapfrog your competition, you will at least have to match how hard they are competing to stand a chance.

If quality external sites link to your website, they give it a specific amount of ‘real’ PageRank, which is shared amongst all the internal pages of your website.

This, in turn, provides a signal for where a page on your website ranks on Google.

To achieve a more significant PageRank, you will need to build more quality links (or ‘Google juice’) to your website; these days this is also described as trust or domain authority.
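PageRank started life as a published formula: each page passes its score on, split equally between the pages it links to and scaled by a damping factor (0.85 in Brin and Page’s original paper). Below is a simplified power-iteration sketch on a hypothetical four-page link graph. Google’s present-day ranking uses far more signals than this, so read it as an illustration of how link value flows between pages, not as how Google ranks today.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank by power iteration.

    links maps each page to the list of pages it links to. Every link
    target must also be a key. Pages with no outgoing links spread their
    score evenly across every page.
    """
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page gets a small baseline share regardless of links
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links[page] or pages  # dangling page: share with everyone
            share = damping * rank[page] / len(targets)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank

# Hypothetical graph: one outside site links in; internal pages link to each other
LINKS = {
    "external-blog": ["home"],
    "home": ["services", "about"],
    "services": ["home"],
    "about": ["home"],
}

if __name__ == "__main__":
    for page, score in sorted(pagerank(LINKS).items(), key=lambda kv: -kv[1]):
        print(f"{page:15s} {score:.3f}")
```

The homepage ends up with the highest score because every other page links to it, while the page nothing links to keeps only the baseline share.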

Let’s talk a bit about Google and its fiddly algorithm

Google is a link-based search engine. Its algorithms cannot actually tell whether the content on your website is good quality.

However, Google can tell whether that content is ‘popular’. It also recognises poor or inadequate content and can penalise a website for it.

How? By simply making an algorithm change that can take away all the traffic the website once enjoyed.

If you ask Google about a sudden drop in rankings, you will get a reply along the lines of “our engineers have gotten better at detecting poor quality content and unnatural links”.

If you have bought links for your website and Google penalises you for it, this is termed a ‘Manual Action’, and you will be notified about it once you sign up to Google Webmaster Tools.

Website optimisation

For onsite optimisation, try to link from the main content text of a page to other pages on your website. However, we strongly advise you to do this only where the linked pages are relevant to the main content of the original page.

And remember, when it comes to backlinks, one link from a website with a very high domain authority (DA) is much better than five links from a website with a shoddy DA.

Usually we only link two pages when both have a keyword in their title element. We strongly advise against using auto-generated links.

In fact, Google has penalised sites in the past for using auto-link plugins.

Therefore, it is best to stay away from them unless you want to appear on Google’s radar. If you want to rank highly for a specific keyword or phrase, you can try linking to a page with the exact key phrase in the anchor text.

This technique can help you boost your rankings across all search engine platforms.

However, it is important to remember that since 2016 Google has been looking to aggressively penalise optimisers who use manipulative anchor text.

Be sensible and stick to plain URL links or brand mentions, which will help you build brand authority for your website in a less risky way.
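If you want a rough idea of how your own anchor text mix looks, one simple approach is to sort your backlinks’ anchor text into plain URL, brand, exact-match and other, then look at the proportions. The brand name, key phrase and anchors below are hypothetical, and the buckets are our own simplification; Google does not publish a safe ratio for any of them.

```python
from collections import Counter

BUCKETS = ("url", "brand", "exact_match", "other")

def classify_anchor(anchor, key_phrase, brand):
    """Put one anchor text into a rough bucket: url, brand, exact_match or other."""
    text = anchor.strip().lower()
    if text.startswith(("http://", "https://", "www.")):
        return "url"
    if brand.lower() in text:
        return "brand"
    if text == key_phrase.lower():
        return "exact_match"
    return "other"

def anchor_profile(anchors, key_phrase, brand):
    """Return the share (0 to 1) of anchors falling into each bucket."""
    counts = Counter(classify_anchor(a, key_phrase, brand) for a in anchors)
    total = len(anchors) or 1  # avoid dividing by zero when there are no anchors
    return {bucket: counts[bucket] / total for bucket in BUCKETS}

# Hypothetical backlink anchors for a hypothetical agency
ANCHORS = [
    "https://www.example.co.uk",
    "Example Agency",
    "leicester seo",
    "Leicester SEO",
    "click here",
    "Example Agency blog",
    "www.example.co.uk/services",
]

if __name__ == "__main__":
    for bucket, share in anchor_profile(ANCHORS, "Leicester SEO", "Example Agency").items():
        print(f"{bucket:12s} {share:.0%}")
```

A profile dominated by the `exact_match` bucket is the pattern the paragraph above warns against.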

How to insert links naturally within your content and why

Anchor text links in internal navigation can still add SEO value to your website, as long as you keep them natural.

Google needs to find links so that it can categorise the pages on your website.

The SEO value of a cleverly placed internal link should never be underestimated. However, ensure that you do not overdo it.

Too many links on a webpage make for a poor user experience, which can offset all the benefits you were getting and perhaps even set you back further than you had gained in the first place.
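If you want to keep an eye on how many links a page carries, a simple count is a sensible starting point. This sketch uses Python’s built-in HTML parser to list the `href` of every `<a>` tag in a page’s HTML; the sample markup is made up, and we are not suggesting any particular number of links is ‘too many’, as that is a judgement about your visitors.

```python
from html.parser import HTMLParser

class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag that has one."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lower-cases tag names; attrs is a list of (name, value) pairs
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)

def page_links(html):
    """Return the list of link targets found in an HTML string."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.hrefs

if __name__ == "__main__":
    sample = ('<nav><a href="/">Home</a><a href="/services">Services</a></nav>'
              '<p><a name="top">No href</a></p>')
    links = page_links(sample)
    print(len(links), "links:", links)
```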

How Google interprets those links

Just as a human visitor clicks on links to open new pages, a search engine like Google ‘crawls’ your pages by following the links you have inserted into your website.

Once the pages are crawled, they are indexed, and usually within a few days they will be returned on a SERP.

Once Google knows all there is to know about your website, it retains the pages it deems ‘useful’.

Useful pages retained by Google are usually those that consist of high quality original content or have a lot of high quality inbound links.

All the remaining pages are de-indexed. Too many low quality pages on your site could affect the overall performance of your website in Google SERPs.

Ideally you will have pages on your website that are ‘unique’ and stand out from your competition. This means that the page titles, content and meta-descriptions used in your web pages are all unique.
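A quick way to check the uniqueness point above is to group your pages by title and by meta description and flag any value used more than once. The page data here is hypothetical; in practice you would pull it from a crawl of your own site.

```python
from collections import defaultdict

def find_duplicates(pages, field):
    """Return {value: [urls]} for every value of `field` shared by two or more pages.

    Values are compared ignoring case and surrounding whitespace.
    """
    by_value = defaultdict(list)
    for url, meta in pages.items():
        value = meta.get(field, "").strip().lower()
        if value:
            by_value[value].append(url)
    return {value: urls for value, urls in by_value.items() if len(urls) > 1}

# Hypothetical pages: the PPC page has reused the SEO page's description
PAGES = {
    "/services/seo": {"title": "SEO Services | Example Agency",
                      "description": "Search engine optimisation for local businesses."},
    "/services/ppc": {"title": "PPC Services | Example Agency",
                      "description": "Search engine optimisation for local businesses."},
    "/about": {"title": "About Us | Example Agency",
               "description": "Meet the team."},
}

if __name__ == "__main__":
    for field in ("title", "description"):
        for value, urls in find_duplicates(PAGES, field).items():
            print(f"Duplicate {field}: {value!r} on {urls}")
```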

Remember, Google might not use your meta description in its search results for certain keywords if it deems the description not relevant to the search.

If you are not careful, spammers might scrape your descriptions and use your original text as the main content of their own websites.

There is no point in using meta keywords these days: Google has gone on record to say it ignores them, and Bing has said it may treat them as a spam signal.

The analysis of your website will take some time, as Google examines all the text content and links on your website.

Keyword stuffing

You do not have to keyword stuff your content to rank highly on a Google SERP. You can optimise your pages for more traffic by using the ‘key phrase’ (not just the keyword) more often, along with its synonyms and co-occurring keywords, in links, titles and page content.

There is no magic formula stating that your content needs ‘x’ amount of text or that your keyword density should ideally be ‘y’ to get more traffic to your website.
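Since keyword density comes up so often, here is what the number actually measures: the share of your words taken up by a phrase. This is a rough sketch (simple word splitting, case-insensitive, non-overlapping whole-phrase matches), and as the paragraph says, there is no target value to aim for; it is only useful for spotting copy that is obviously stuffed.

```python
import re

WORD = re.compile(r"[\w']+")

def phrase_density(text, phrase):
    """Return (occurrences, density %) of `phrase` in `text`.

    Density = words belonging to phrase matches / total words * 100.
    Matching is case-insensitive, on whole words, and matches do not overlap.
    """
    words = WORD.findall(text.lower())
    target = WORD.findall(phrase.lower())
    if not words or not target:
        return 0, 0.0
    count = 0
    i = 0
    while i <= len(words) - len(target):
        if words[i:i + len(target)] == target:
            count += 1
            i += len(target)  # jump past the match so matches never overlap
        else:
            i += 1
    density = 100 * count * len(target) / len(words)
    return count, density

if __name__ == "__main__":
    copy = "Leicester SEO agency. Our Leicester SEO team offers SEO audits."
    print(phrase_density(copy, "Leicester SEO"))
```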

The priority should be to include the key phrase in the content naturally and to give your users the best possible user experience.

Ideally, you should use a range of relevant, unique words on your webpage so that it satisfies as many long-tail search queries as possible.

How you link your content is really important.

I know we’ve said it over and over, but the linking techniques you employ will often be used by Google to classify your website. For example, ‘affiliate’ sites are no longer effective in Google these days unless they have high quality content and backlinks.

If your website links out to low quality or irrelevant websites, there is a possibility that Google will ignore your website too. But this could depend on which websites you link to or, more importantly, ‘how’ you link to them.

Most SEO experts believe that who you link to (and who links back to you) is very important in determining a hub of authority for your website.

It is very simple really.

You want your website to be in that hub, ideally in the centre.

As Google gets even better at determining the ‘topical relevancy’ of content on a webpage, how well you link out from your pages will play a crucial role in how a particular page ranks on a SERP.

Traditionally, the practice was to place links to external websites on single pages deeper within a website’s architecture, rather than on its higher or main pages (home page, product pages etc.).

The benefit of this strategy is that your main pages soak up most of the ‘Google juice’, which means your more important pages attract more traffic.

This tactic can be thought of as old school, but if done correctly it can still be very useful for your site.

‘Content is king’

You have probably heard this phrase repeated often, sometimes annoyingly so, but the bottom line is that high quality content will help your website attract what Google terms ‘natural link growth’.

Too many incoming links to your site within a short span of time can lead to your site being devalued by Google. It is best to err on the side of caution and aim for diversity in your links.

This makes them look more ‘natural’ in Google’s eyes, which means there is less chance of your page being ignored or penalised in the future.

In 2018, strive for natural and diverse links to rank high in Google SERPs.

Google’s criteria

Google might devalue template-generated links, individual pages, individual links or even whole websites if it considers them ‘unnecessary’ or a significant contributor to a ‘poor user experience’ for visitors.

Google looks out for who you are linking out to, who is linking to your website and the quality of those links.

These are important factors (amongst other factors) that ultimately establish where a page on your website should rank on a Google SERP.

What can add to the confusion is that the page ranking highly on Google might not be the page you want to rank, or even the page that determines how your site is ranked on a Google SERP.

Once Google establishes your website’s domain authority, the page it finds most relevant, or the page with which it has no issues, will rank well.

Work on building links to your important pages, or make sure that at least one page is better optimised than the other pages on your website for your most important key phrase. It is important to remember that Google does not want to rank ‘thin’ pages from your website in its SERPs.

So, if you want a particular page on your website to rank well, make sure it has everything Google is looking for, which these days is an ever-increasing list.

Algorithm updates

Every few months Google updates its algorithms to penalise sites that are manipulating SERP rankings or using sloppy optimisation techniques.

Google Panda and Penguin were two such updates to Google’s algorithms.

The true art of SEO is to rank well without being penalised by Google’s ever-evolving algorithms or flagged by a human visitor. As you have probably guessed, that’s very tricky and not at all straightforward.

Remember, the web is constantly changing, and a fast site contributes to a great user experience. Make improving your website’s download speed a priority at all times.

There is no denying the fact that every webmaster has to walk a tightrope these days to optimise their website for search engines.

Read on if you would like to find out how not to topple off the tightrope and be successful in modern web optimisation.

The importance of keyword research

Before you start an SEO campaign, the first step you should take is to do the relevant keyword research and analysis.


It is perhaps the ‘whitest’ of white hat SEO techniques: a hundred percent Google friendly and, if done correctly, guaranteed to deliver positive results.

The chart above shows a page that had not been ranking well for a long time, but one we believed could, and perhaps should, rank well in Google using just this simple ‘trick’.

This is possibly the most basic illustration we can give of a crucial aspect of on-page SEO, a technique that is 100% white hat and will never land you in the dock with Google.

This technique or trick should work for any key phrase on any website. However, results will obviously differ based on the quality of your content, the user experience your page provides and the strength of competing pages in Google SERPs.

Let us just clarify that we did not use any SEO gimmicks like redirects or any hidden technique to get the rankings of the page to go up.

Nor did we add the target key phrase or internal links to the page to give its rankings a boost.

But as you can see from the chart, the technique we used undeniably delivered positive results.

Anybody can profit from this technique as long as they understand how Google works, or seems to work; nobody can be absolutely certain how Google works these days anyway.

So, you are probably wondering what exactly we did to get such an upturn in our rankings, aren’t you?

It is very simple, actually: we just did some keyword research and added one ‘keyword’ to the content, without adding to the actual keyword phrase itself (as that could be seen as keyword stuffing).